Author: Cameron Harding, Storage Lead Architect | Title: Cloud Storage: Are Tin Huggers Just Shaving Yaks | Published on 3rd July 2019

Cloud Storage: Are Tin Huggers Just Shaving Yaks?

Over the past decade, the transition to cloud storage has accelerated steadily, driven by its simplicity and agility. This is evident in the continuing 40% annual growth of AWS and the expansion of Office 365, Azure and Google.

Cloud is Simple

The simplicity and convenience of cloud storage are major factors for cloud users. Aspects of data management such as availability, usability, consistency, integrity and security have always been a necessity for organisations. However, managing them requires a lot of administrative effort and can be time-consuming. Cloud storage allows the organisation to concentrate on providing business value rather than spending energy on data management. The higher cost of cloud computing compared to on-premises computing is often offset by a reduction in administrative costs, which helps to justify the change.

Software as a Service (SaaS)

SaaS, the most convenient type of cloud service, is where the application as well as the underlying infrastructure are managed and maintained by the provider. Examples of SaaS include corporate all-in-one productivity suites Office 365 and Google Apps, which are quickly replacing on-premises messaging services and document repositories.

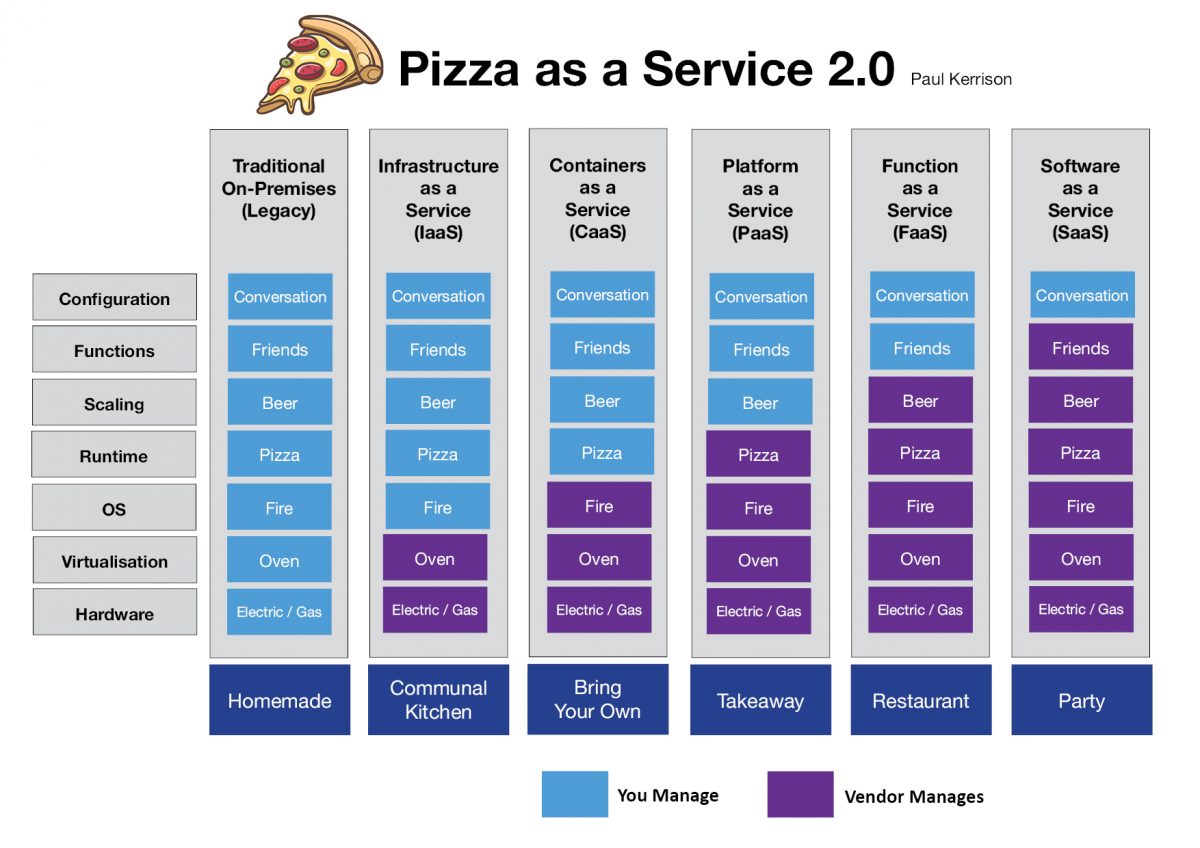

There are various types of cloud services. Standard cloud service models have been defined by NIST (IaaS, PaaS and SaaS), which Paul Kerrison’s “Pizza as a Service 2.0” explains in simple terms.

NIST Definition of Public Cloud

One of the capabilities most commonly lacking from public cloud services is backup. Backup is usually the responsibility of the consumer or is delivered as a separate service to SaaS. Because customers tend to expect backup management to be included, the move to cloud can often fail to meet their expectations of integrated data management.

Traditional methods of data protection such as OS agents without source-side dedupe may not be suitable, as they can often result in high network costs or network congestion for consumers. The rapid adoption of cloud-native applications has resulted in backup and recovery vendors eagerly releasing products capable of protecting critical cloud-native data.
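To illustrate why source-side dedupe matters for network cost, here is a minimal sketch rather than any vendor's implementation. It assumes fixed-size chunks and an in-memory set standing in for the backup target's chunk index; the function names and the sample workload are hypothetical.

```python
import hashlib


def chunks(data: bytes, size: int = 4096):
    """Split data into fixed-size chunks (an assumption; products often use variable-size chunking)."""
    for offset in range(0, len(data), size):
        yield data[offset:offset + size]


def bytes_to_send(data: bytes, known_hashes: set, size: int = 4096) -> int:
    """Return how many bytes must cross the network with source-side dedupe.

    Each chunk is hashed at the source, and only chunks whose hash is not
    already in known_hashes are counted as transmitted. known_hashes is
    updated in place to mimic the target's chunk index.
    """
    sent = 0
    for chunk in chunks(data, size):
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in known_hashes:
            known_hashes.add(digest)
            sent += len(chunk)
    return sent


# Hypothetical workload: a 1 MiB payload built from one repeated 4 KiB block,
# backed up twice against the same target index.
block = bytes(range(256)) * 16   # 4096 bytes
payload = block * 256            # 1 MiB
index = set()
first_backup = bytes_to_send(payload, index)
second_backup = bytes_to_send(payload, index)
print(len(payload), first_backup, second_backup)
```

Without dedupe at the source, both backups would send the full payload. In this sketch the first backup sends a single unique chunk and the second sends nothing.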

Cloud is Agile

Another key feature of Public Cloud implementation is agility.

Fast provisioning and the ability to expand make public cloud ideal when infrastructure requirements are unknown or where delays in sourcing hardware can’t be tolerated. A pay-as-you-go model (a cloud model where the customer is charged only for what they use) is perfect for transient services or projects where the performance or capacity requirements change frequently or unexpectedly.
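A rough sketch of why pay-as-you-go suits transient or bursty workloads follows. All figures are hypothetical: the usage profiles, the unit rates, and the assumption that the cloud rate is higher per unit than the fixed-capacity rate are illustrative only.

```python
def pay_as_you_go_cost(hourly_usage, rate_per_unit_hour):
    """Charge only for the units actually consumed in each hour."""
    return sum(units * rate_per_unit_hour for units in hourly_usage)


def fixed_capacity_cost(hourly_usage, rate_per_unit_hour):
    """Charge for peak capacity in every hour, whether it is used or not."""
    peak = max(hourly_usage)
    return peak * rate_per_unit_hour * len(hourly_usage)


# Hypothetical rates: cloud is assumed dearer per unit-hour than owned capacity.
CLOUD_RATE = 1.5
FIXED_RATE = 1.0

# Bursty day: 2 units for 20 hours, then 10 units for 4 hours.
bursty = [2] * 20 + [10] * 4
# Steady day: 10 units around the clock.
steady = [10] * 24

bursty_payg = pay_as_you_go_cost(bursty, CLOUD_RATE)
bursty_fixed = fixed_capacity_cost(bursty, FIXED_RATE)
steady_payg = pay_as_you_go_cost(steady, CLOUD_RATE)
steady_fixed = fixed_capacity_cost(steady, FIXED_RATE)
print(bursty_payg, bursty_fixed, steady_payg, steady_fixed)
```

Under these assumed numbers the bursty workload is cheaper on pay-as-you-go, while the steady workload is cheaper on fixed capacity, which is why the model favours transient or unpredictable demand.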

Platform as a Service (PaaS)

When it comes to application development, PaaS drives business agility.

PaaS provides managed infrastructure, operating systems, middleware and runtimes, leaving only data and applications to be managed by the customer (see “Pizza as a Service 2.0” above). Independent providers such as Zimki, Engine Yard and Heroku pioneered PaaS, with Zimki claiming that “We’ve already shaved those Yaks for you!” Other providers noticed the potential, and it wasn’t long before AWS, Google, Microsoft, Pivotal and Red Hat all released competing platforms.

How Storage can take advantage of Public Cloud

Cloud-First Policies

Cloud-First policies are used to assess workloads. They help determine not only whether a workload can run in the cloud but also whether it should.

The assessment involves a decision framework consisting of questions like:

- Is a SaaS equivalent of the service available and does it provide the right cost, reliability, performance and capabilities?

- Can the service be provided as a PaaS application, an application container or a virtual machine?

- Which hypervisors or IaaS providers will the software vendor support?

- Are there dependencies with other services that may be compromised?

- Is the service more suited to private cloud (greater control and security) or public cloud (fast provisioning, pay-as-you-go, rapid scaling)?

- Does your data governance policy prevent the use of shared infrastructure or off-shore infrastructure?

- Does the change in hosting impact any support agreements?

Asking these sorts of questions avoids the pitfalls that many early adopters have fallen into by taking what could be considered a “Public Cloud Only” approach*. Working through these questions typically identifies a requirement for a multi-cloud or hybrid cloud environment.

*Cloud First vs Cloud Only: A cloud-only mentality enforces cloud computing solutions on any and all enterprise operations. Many businesses that claim to be cloud-first are actually promoting cloud-only practices. The difference is more in thought process than application; cloud-only is a mandate while cloud-first is a suggested routine.
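The questions above can be sketched as a small decision function. This is one possible encoding, not a prescribed framework: the `Workload` fields, the order in which the checks are applied, and the recommendation labels are all assumptions made for illustration. The dependency and support-agreement questions are left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical answers to the assessment questions for one service."""
    name: str
    saas_equivalent_fits: bool          # right cost, reliability, performance, capabilities
    runs_as_paas_or_container: bool     # can be delivered as PaaS or a container
    vendor_supports_iaas: bool          # software vendor supports the IaaS provider
    governance_allows_shared: bool      # policy permits shared or off-shore infrastructure
    needs_tight_control: bool           # greater control and security favour private cloud


def recommend(w: Workload) -> str:
    """Return a suggested hosting model; the ordering of checks is an assumption."""
    if not w.governance_allows_shared:
        return "private cloud"
    if w.saas_equivalent_fits:
        return "SaaS"
    if w.needs_tight_control:
        return "private cloud"
    if w.runs_as_paas_or_container:
        return "PaaS or container platform"
    if w.vendor_supports_iaas:
        return "public cloud IaaS"
    return "private cloud"


# Hypothetical workloads for illustration only.
mail = Workload("mail", True, False, False, True, False)
records = Workload("records", True, True, True, False, False)
web_app = Workload("web app", False, True, True, True, False)

for workload in (mail, records, web_app):
    print(workload.name, "->", recommend(workload))
```

Running each workload through the same function, rather than defaulting everything to public cloud, is what separates a cloud-first assessment from a cloud-only mandate. A mix of results across workloads is the signal that a multi-cloud or hybrid environment is needed.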

Multi-Cloud or Hybrid Cloud?

What is the difference between Multi and Hybrid Cloud?

Multi-cloud is simply the use of multiple clouds, whether they be private or public. Hybrid cloud is defined by NIST as multiple distinct clouds, bound together by technology that enables data and application portability.

Flash Storage

Flash has driven cloud storage in an unexpected way. In many circumstances, the falling cost of flash combined with capabilities such as inline dedupe and compression has made flash storage a cheaper alternative to high-performance hard disks. Most modern storage systems are highly optimised in their all-flash variants, making flash systems attractive for performance reasons.

Selecting a storage system that doesn’t support spinning rust leaves us with a problem of where to store data when a lower cost might be more of a priority than performance. A simple solution would be to bring it to the cloud. Leading storage vendors have recognised this, and many all-flash systems now have the ability to tier data out to cloud storage. This functionality is not just limited to cold data but can also be used for longer term retention of snapshots.
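As a minimal sketch of how such tiering might be decided, the function below places data by age since last access. The 30-day threshold, the tier names and the function itself are hypothetical; real systems apply their own vendor-specific policies.

```python
from datetime import datetime, timedelta


def choose_tier(last_accessed: datetime, now: datetime, cold_after_days: int = 30) -> str:
    """Return 'cloud' for data untouched longer than the threshold, else 'flash'."""
    if now - last_accessed > timedelta(days=cold_after_days):
        return "cloud"
    return "flash"


# Hypothetical items with their last access times.
now = datetime(2019, 7, 3)
items = {
    "active-database": datetime(2019, 7, 1),
    "old-snapshot": datetime(2019, 1, 15),
}
placement = {name: choose_tier(accessed, now) for name, accessed in items.items()}
print(placement)
```

The same rule could be applied to snapshots by using their creation time in place of the last access time.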


Portability

Cloud portability is the ability to move applications and data from one cloud to another with minimal disruption. It allows the migration of cloud services from one cloud service provider to another or between a public cloud and a private cloud.

Hyperconverged and Converged Infrastructures provide on-premises private clouds that lend themselves to cloud bursting and other methods of extending workloads into hybrid cloud.

VMware

VMware has championed this mobility, making it possible to perform long-distance live migrations between VMware Software-Defined Data Centres (SDDC). This portability is currently possible between private cloud, VMware on AWS and VMware on Azure, with support for more providers on the way.

Microsoft

Microsoft have taken a different approach with Azure Stack, which extends Azure into the on-premises data centre, addressing requirements such as the low latency needed for real-time applications. Azure Stack runs on validated Hyperconverged Infrastructure from multiple hardware vendors with the management plane running in Azure.

Amazon

Amazon has followed a similar model with AWS Outposts, which provides the flexibility to run either VMware or AWS native EC2 instances on fully managed on-premises AWS hardware. From a management perspective, AWS Outposts simply appears to the consumer as additional cloud regions.

Data Archiving

Collective worldwide data stood at 33 zettabytes in 2018 and is expected to continue growing at a compound annual rate of 61%. All this data will have to be stored somewhere, and one of the cheapest options is cloud archive storage. For long-term archiving of cold data, services such as Amazon’s S3 Glacier and Azure’s Archive Access Tier combine low ingestion costs and extremely low costs per GB. Although tape is still popular for long-term archiving, on-premises products such as Dell EMC’s Isilon Archive and Data Domain provide the capability to tier out to the public cloud, extending on-premises archives beyond their physical limits.

Cameron’s Tips!

- I strongly recommend using a decision framework to assess each workload, new and existing, to determine where the best location for the data is. For most organisations, this will be a combination of SaaS and hybrid cloud IaaS. For larger organisations or organisations centred around development, there is likely to be some adoption of PaaS and container platforms.

- When determining the most suitable location for data, don’t assume that it has to be either on-premises or cloud. There are plenty of options available for providing storage tiering across hybrid cloud environments.

- Lastly, even if hybrid cloud is not the right solution for your organisation at the moment, HCI environments that enable portability and storage systems that can intelligently tier to cloud can leave your options open for the future.

Author’s Profile

Cameron Harding is Outcomex’s Storage Lead Architect. He has worked in the Australian IT industry for 25 years. With in-depth knowledge and technical skills, Cameron holds certifications with the storage industry leaders: Cisco, NetApp, Pure Storage, VMware, and Dell EMC.